Bull Series - Hey ChatGPT, Build Me a DCF

Over the past few weeks, I've been testing ChatGPT’s most powerful reasoning tool to date, the o3 model, and I challenged it with one of Wall Street's fundamental valuation techniques: the Discounted Cash Flow (DCF) analysis.

For those less familiar, a DCF analysis is a cornerstone valuation method investment bankers use to determine a company's intrinsic value. Essentially, it projects a company’s future cash flows and discounts them back to present value using a discount rate reflecting the investment’s risk.
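To make the mechanics concrete, here is a minimal Python sketch of that calculation. It is not the template I sent to o3: every input and the function name `equity_value_per_share` are hypothetical, and it assumes a single constant FCF growth rate, year-end discounting, and a Gordon growth terminal value.

```python
def equity_value_per_share(base_fcf, fcf_growth, discount_rate,
                           terminal_growth, years, net_debt, shares):
    """Basic DCF under simplifying assumptions (all inputs hypothetical)."""
    if discount_rate <= terminal_growth:
        # The Gordon growth formula breaks down when r <= g.
        raise ValueError("discount rate must exceed terminal growth rate")

    fcf = base_fcf
    pv_of_fcfs = 0.0
    for t in range(1, years + 1):
        fcf *= 1 + fcf_growth                      # project next year's FCF
        pv_of_fcfs += fcf / (1 + discount_rate) ** t  # discount to today

    # Terminal value at the end of the forecast, then discounted to today.
    terminal_value = fcf * (1 + terminal_growth) / (discount_rate - terminal_growth)
    pv_of_terminal = terminal_value / (1 + discount_rate) ** years

    enterprise_value = pv_of_fcfs + pv_of_terminal
    equity_value = enterprise_value - net_debt     # bridge from EV to equity
    return equity_value / shares


if __name__ == "__main__":
    # Placeholder inputs chosen only to exercise the function.
    value = equity_value_per_share(
        base_fcf=100.0, fcf_growth=0.10, discount_rate=0.10,
        terminal_growth=0.02, years=5, net_debt=200.0, shares=100.0,
    )
    print(f"Equity value per share: {value:.2f}")
```

Real models layer on conventions this sketch ignores (mid-year discounting, exit multiples, diluted share counts), which is exactly where house style starts to matter.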

Setting the Stage

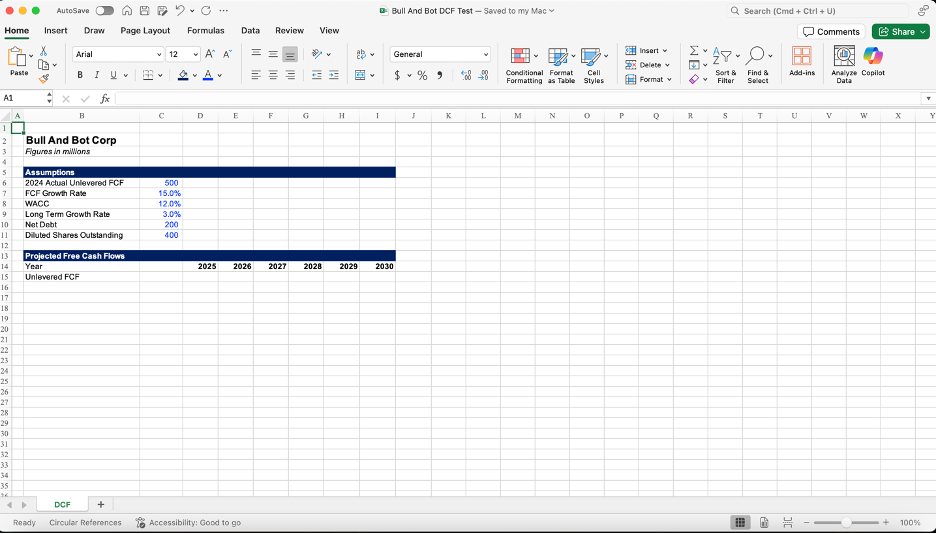

For this experiment, I created a simple excel template (shown below) and sent it to o3. The excel sheet had minimal assumptions o3 would need to conduct a basic DCF analysis:

My instructions were simple: look at the Excel sheet and use the given assumptions to perform a DCF analysis, calculate the equity value per share, and construct a two-way sensitivity table of equity value per share against FCF Growth Rate (increments of ±5%) and Long Term Growth Rate (increments of ±0.5%). Here's what followed:

Navigating the AI Black Box

One thing I love about using o3 is its transparent thinking process. Reading through its reasoning is pretty fascinating and informative. For example, during a prior interaction, I realized something important while reading its thoughts: when faced with uncertainty or ambiguity while performing financial analysis, o3 tends to default to textbook-standard methods.

But as anyone in finance knows, the way assumptions and methodologies are applied in a DCF is rarely one-size-fits-all. Different shops have different conventions, and real-world modeling is full of nuance. So I wanted o3 to check in with me first when faced with uncertainty, rather than plow ahead with a generic approach.

So in earlier experiments, I explicitly instructed o3 to do two things: (1) prioritize accuracy over speed, and (2) pause and ask for clarification before calculating if there’s any ambiguity or if assumptions aren’t clear. Basically, I wanted it to behave like a diligent junior analyst: someone who flags uncertainty and asks for clarification instead of guessing.

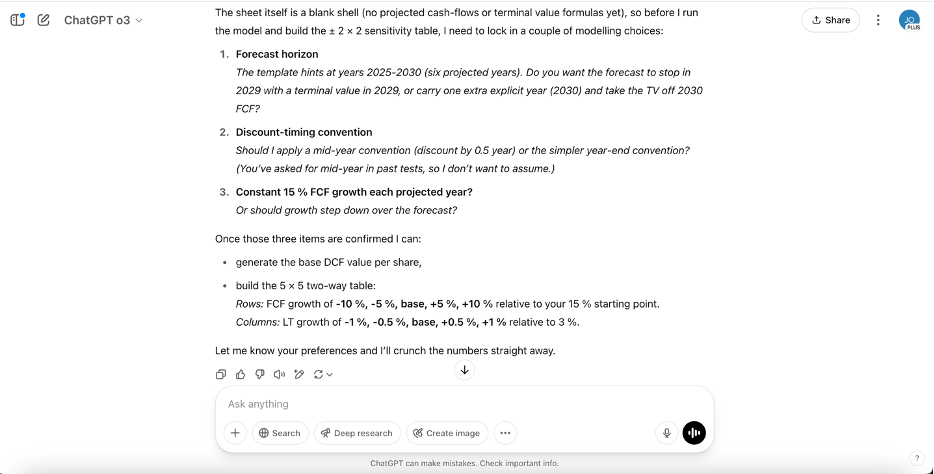

Thanks to OpenAI’s recent memory update, o3 remembered those preferences. When I gave it the DCF task this time, it proactively stopped to clarify ambiguous inputs. The style of questions it asked was exactly what I’d expect from a sharp junior banker making sure we’re aligned before running with a model.

Accuracy and Self-Correction

Once I responded to its questions, it took o3 exactly 1 minute and 4 seconds to complete the DCF. It returned an accurate equity value per share of $24.50 but made an error in the sensitivity analysis: it misread my instructions and used ±0.5% changes for both variables (instead of ±5% for the FCF growth rate).

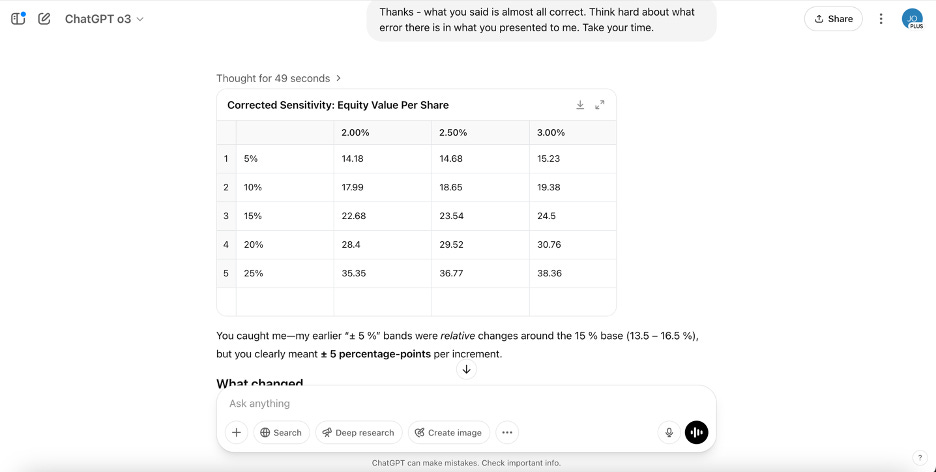

So I nudged o3 to rethink its output and spot the error on its own. Impressively, o3 immediately recognized it and corrected itself:

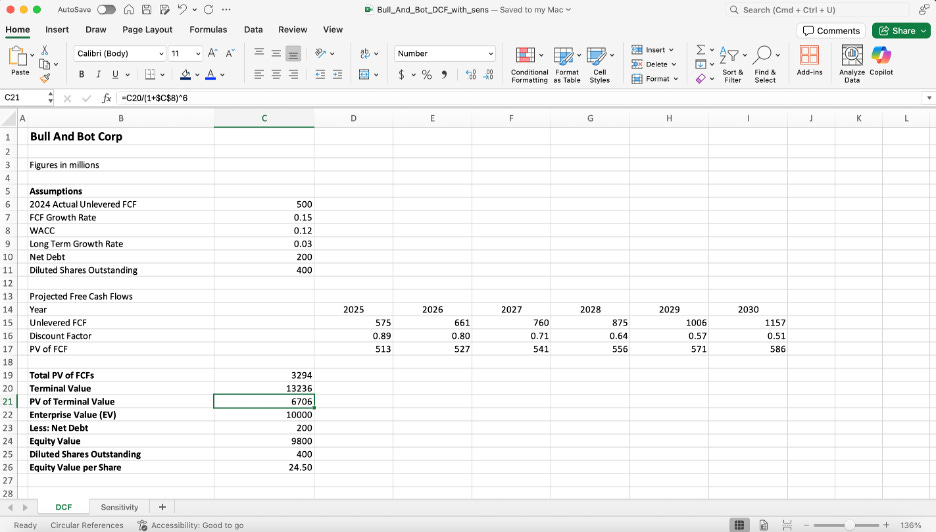

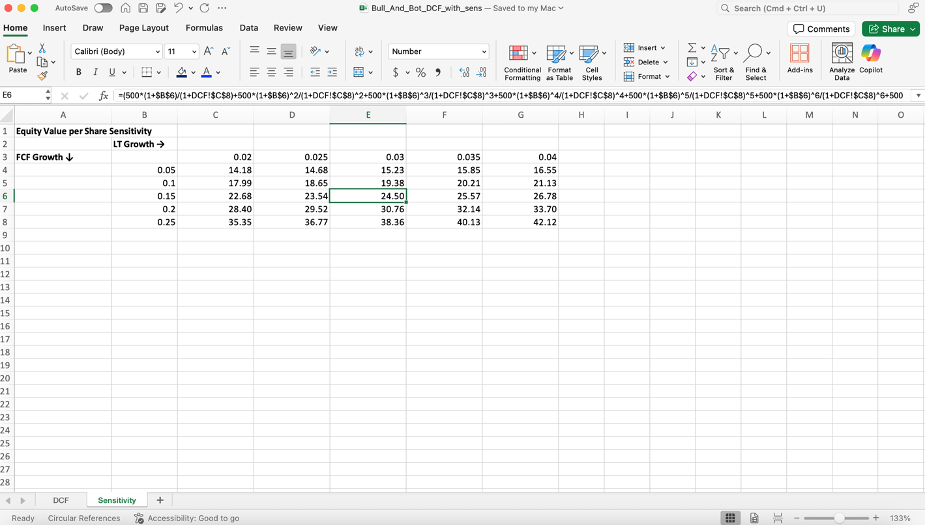

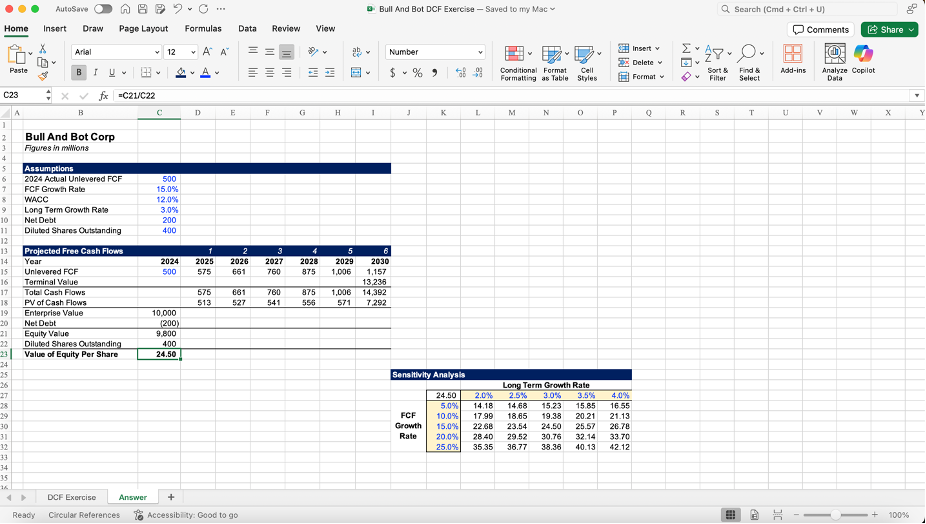
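For reference, the two-way sensitivity table from my instructions can be sketched in a few lines of Python. This is an illustration, not o3's output: the DCF inputs are placeholders, the helper names are hypothetical, and I'm assuming the ±5% and ±0.5% increments are percentage points added to the base case, two steps in each direction.

```python
def value_per_share(fcf_growth, terminal_growth, base_fcf=100.0,
                    discount_rate=0.10, years=5, net_debt=200.0, shares=100.0):
    """Simplified DCF; every default input is a placeholder."""
    fcf, pv = base_fcf, 0.0
    for t in range(1, years + 1):
        fcf *= 1 + fcf_growth
        pv += fcf / (1 + discount_rate) ** t
    terminal_value = fcf * (1 + terminal_growth) / (discount_rate - terminal_growth)
    pv += terminal_value / (1 + discount_rate) ** years
    return (pv - net_debt) / shares


def axis(base, step, n_steps=2):
    """Base case plus/minus n_steps increments of `step` (rounded to tame float noise)."""
    return [round(base + k * step, 6) for k in range(-n_steps, n_steps + 1)]


def sensitivity_table(base_fcf_growth, base_terminal_growth):
    rows = axis(base_fcf_growth, 0.05)        # FCF growth: 5-point steps
    cols = axis(base_terminal_growth, 0.005)  # long-term growth: 0.5-point steps
    grid = [[value_per_share(g, tg) for tg in cols] for g in rows]
    return rows, cols, grid


if __name__ == "__main__":
    rows, cols, grid = sensitivity_table(0.10, 0.02)
    print("FCF g \\ LTG " + "".join(f"{c:>9.1%}" for c in cols))
    for g, line in zip(rows, grid):
        print(f"{g:>11.0%} " + "".join(f"{v:>9.2f}" for v in line))
```

Keeping the two step sizes as separate arguments to `axis` makes the mix-up described above (using 0.5% for both variables) easy to spot in review.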

Finally, to make checking o3’s work easier, I asked it to fill out the Excel spreadsheet with its work, using formulas instead of hard-coded numbers, and to send it back to me in a downloadable format. Within minutes, it sent back an Excel workbook filled with easily traceable, formula-based calculations that aligned closely with my own prepared solution. Despite minor methodological differences, like constructing sensitivity tables with intricate formulas instead of using Excel's data table function, its results were coherent and accurate:

o3’s Excel Answer Sheet:

My Excel Answer Sheet:

The "Wow" Factors

I was really blown away by this experiment, even as someone closely engaged with ongoing AI advancements. A few notable things:

Skill, Speed, and Precision: I handed o3 a bare-bones template with minimal assumptions and inputs. From that, it accurately generated cash flow projections, found the terminal value, did the PV math, and arrived at the same answer as me. Within minutes, I had an end-to-end DCF model built using only chat interactions.

Self-Correction: Once I nudged it to ‘think hard’ about its error regarding its initial sensitivity table output, o3 very quickly identified and rectified its own mistake without any further input from me.

Compatibility with Excel: I am very impressed with o3’s ability to ‘show me its work’, use formulas (not just hard-coded values), and send back a filled-out Excel workbook on command. Though the spreadsheet itself wouldn’t be what IB/PE professionals consider ‘final’, it’s a very reasonable and coherent first-draft / back-of-the-envelope version.

Memory and Interaction: o3 recalled my preferences from prior interactions to pause and ask questions to clarify uncertainties before proceeding with a task. The interaction itself and the questions it asked really simulated realistic junior analyst behavior.
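To illustrate what formula-based (rather than hard-coded) cells mean in practice, here is a small Python sketch that generates Excel formula strings for a projection block. The layout is entirely hypothetical and is not the workbook o3 produced: it assumes base FCF in B1, FCF growth in B2, the discount rate in B3, year indices in row 5, projected FCF in row 6, and present values in row 7.

```python
def projection_formulas(years):
    """Return {cell: value_or_formula} for a hypothetical DCF projection block."""
    if not 1 <= years <= 25:
        raise ValueError("this sketch only handles single-letter columns B..Z")

    cells = {}
    for t in range(1, years + 1):
        col = chr(ord("B") + t - 1)  # year 1 -> B, year 2 -> C, ...
        # Year 1 grows off the base FCF input; later years grow off the prior year.
        prev = "$B$1" if t == 1 else f"{chr(ord(col) - 1)}6"
        cells[f"{col}5"] = t                              # year index
        cells[f"{col}6"] = f"={prev}*(1+$B$2)"            # projected FCF
        cells[f"{col}7"] = f"={col}6/(1+$B$3)^{col}5"     # PV of that FCF
    return cells


if __name__ == "__main__":
    for cell, content in projection_formulas(3).items():
        print(cell, content)
```

Because each cell references the assumption cells instead of embedding numbers, changing B2 or B3 flows through the whole row, which is what makes a workbook like this easy to trace and audit.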

What This Means for Finance Professionals

The real question is, with AI’s expanding capabilities into technical areas like financial modeling and analysis, where should junior talent redirect their efforts? Traditionally, number crunching, slide creation, and formatting edits have taken up a significant part of junior bankers' workloads. However, if you've followed my posts or experimented with AI tools yourself, you'll recognize that these execution tasks will likely be pushed to AI in the future. Junior bankers will soon transition from direct execution to orchestrating AI tools, focusing primarily on refining and enhancing AI-generated outputs.

Redefining Excel Mastery with AI Management Strategies

Until now, excellence in Excel has been integral to success on Wall Street. Banks emphasize the mastery of formulas, functions, and shortcuts, and even hold Excel modeling training sessions for new analysts and associates. However, I think the definition of being "great at Excel" is evolving. Soon, it may have less to do with how fast you can execute Excel shortcuts and more to do with how effectively you can prompt, manage, and integrate AI tools to build models or adjust spreadsheets.

That shift makes AI fluency critical. And developing this fluency will require regular experimentation and training, through both personal initiative and firm-driven programs. While the exact definition of “effective AI management” will continue to evolve alongside the technology, for now, a large part of it is knowing when and how to intervene in AI outputs.

Despite its impressive capabilities, AI still overlooks nuanced, deal-specific adjustments (like revolver sweeps or unusual SG&A splits) unless explicitly told otherwise, especially in larger, more complex models. That’s where human intervention strategies like structured prompts and built-in checkpoints come in.

In my own experiments, I found that instructing AI to proactively flag uncertainties and ask clarifying questions before jumping to conclusions made a huge difference. It dramatically improved alignment and accuracy – and, as you saw earlier, closely mimicked the kind of real-life back-and-forth I’d have with junior team members when walking through modeling assumptions. This wasn’t something I picked up through theory. I learned it by getting my hands dirty: testing prompts, making mistakes, and figuring out how to guide the tool effectively. These intervention strategies helped preserve clarity, minimized errors caused by AI’s overconfidence, and ultimately led to far more reliable outputs.

Technical Expertise: More Critical Than Ever

But learning how to use AI tools isn’t enough. Effectively using them still requires deep, foundational domain knowledge. To properly sanity-check AI-generated outputs, finance professionals need a strong command of financial modeling fundamentals. The ability to critically assess, guide, and challenge what the AI produces will quickly become a core competency. And the only way to do that with confidence is by building rock-solid fundamentals.
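As one illustration of what sanity-checking can look like, here is a short Python sketch of a few automated checks on a DCF output. The function name, the inputs, and especially the 85% terminal-value threshold are hypothetical choices for illustration, not a standard; the axis check mirrors the sensitivity-table slip described earlier.

```python
def sanity_check(discount_rate, terminal_growth, pv_terminal_value,
                 enterprise_value, axis_values, expected_step,
                 max_tv_share=0.85, tol=1e-9):
    """Return a list of human-readable issues; an empty list means no flags."""
    issues = []

    # The Gordon growth formula only makes sense when g < r.
    if terminal_growth >= discount_rate:
        issues.append("terminal growth is not below the discount rate")

    # Flag valuations dominated by the terminal value (threshold is illustrative).
    tv_share = pv_terminal_value / enterprise_value
    if tv_share > max_tv_share:
        issues.append(f"terminal value is {tv_share:.0%} of enterprise value")

    # Confirm the sensitivity axis uses the increment that was actually requested.
    steps = [b - a for a, b in zip(axis_values, axis_values[1:])]
    if any(abs(s - expected_step) > tol for s in steps):
        issues.append(f"sensitivity axis does not step by {expected_step:.2%}")

    return issues


if __name__ == "__main__":
    # An FCF growth axis built with 0.5-point steps when 5-point steps were requested.
    print(sanity_check(0.10, 0.02, 600.0, 1000.0, [0.095, 0.10, 0.105], 0.05))
```

Checks like these catch mechanical slips; deciding whether the assumptions themselves are sensible still takes the domain knowledge described above.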

While AI is excellent at handling formulaic, rule-based tasks, applying strategic judgment, like setting forecast horizons, discount rates, or terminal growth assumptions, remains inherently human. Real-world DCFs are far more complex than the simplified example I tested here. They demand tailored approaches, sharp intuition, and firm-specific modeling nuances that can’t be templated.

That’s where domain expertise comes in. Industry knowledge, hands-on experience, and gut-level instincts built over time are what make analyses go from ‘good enough’ to ‘great’. So no matter how advanced AI becomes, continuously honing your domain knowledge and technical foundations will remain not just relevant, but essential.

Early Career Skill Evolution: Beyond Execution

As AI takes over more of the technical execution, junior bankers will gain time and bandwidth to focus on higher-value work, like generating insights and making strategic contributions. Skills that were once reserved for associates or VPs, like strategic judgment, storytelling, big-picture thinking, and client communication, will become essential much earlier in one’s career. It’s a sharp break from the status quo, where juniors often execute on instructions without much strategic reflection, largely because manual tasks leave little time for it and because of Wall Street’s traditional "stick to what works" mentality.

Human Control: The Ultimate Responsibility

At the end of the day, ultimate control and accountability still rest with us. AI is a powerful tool that amplifies skills – but it doesn’t and shouldn’t replace them. The responsibility for final outputs always lies with the person using the tool. And that means using human judgment to determine what’s valuable, what’s off, and what needs refinement.

To do that well, we need to double down on inherently human skills: nuanced judgment, deep understanding of domain-specific complexities, and interpersonal communication. The professionals who know how to integrate AI with these strengths will undoubtedly become the next generation of leaders in their respective fields. Because in a world where AI does the work, judgment is what sets you apart.

How are you currently experimenting with AI tools in your workflow? If you aren’t, what’s holding you back from doing so?

Super interesting, thanks for this! Now I’m curious to know where each of the major Wall Street banks are at in terms of embracing financial modeling products and/or having formal training sessions on prompting etc as you mentioned!

Such a great piece.

Couldn’t agree more on this:

“To do that well, we need to double down on inherently human skills: nuanced judgment, deep understanding of domain-specific complexities, and interpersonal communication.”

And on a different note, the point you raised about juniors applies far beyond finance. Back in December, I spoke with the country manager of a large company, and he shared a similar dilemma: he wants to invest in young people (he even mentors and finances projects in his free time), but hiring them means spending money, and even more money to train them. Meanwhile, tools are faster and cheaper, and some of his competitors have already paused junior hiring and plugged AI into the gaps. He’s worried that if he doesn’t do the same, he’ll fall behind.

So the question still stands, and it’s a big one - how do we keep integrating young talent into the workforce in a world where AI tools can already do the job?