Bot Series - Cost Shapes Architecture: A Cadence Rule for Multi-Agent Workflows

This post builds on my earlier piece where I introduced my 5-agent Substack Notes Generator workflow. If you missed it, you can read that background here.

Over the last few weeks, I used Make.com to build a multi-agent workflow that creates, logs, and emails me daily Substack Notes ideas. The system runs across five agents, each with a specific role.

Before we dive deeper, here’s a quick visual of the workflow’s architecture as reference:

When I started building this Notes generator, I quickly realized that the challenge wasn’t so much in creating the agents themselves. Rather, it was in orchestration: deciding how much each agent should handle, how they should interact, and how often they should run. And it was that last piece – cadence – that shaped not just how well the system worked, but also how much it cost to run the workflow.

Two of the earliest agents I built illustrate this idea clearly:

Agent 1: Style Analyzer. It ingests a Master Text File (“MTF”) of all my long-form Substack posts to date and makes API calls to GPT-5 to generate a style guide that captures my voice, thought process, and content focus. That style guide then feeds into Agent 3, which also uses GPT-5 through API calls to synthesize the inputs and generate new ideas.

Agent 2: News Sourcer. It runs two HTTP modules inside Make.com to pull fresh AI and finance headlines via NewsAPI calls, organizes them, and passes them to Agent 3 as inputs. Agent 2 runs every day, since news changes daily and I wanted the workflow to produce Notes options that refer to real-time occurrences.

At first, it seemed logical – and architecturally simple – to run both agents daily, since Agent 3 depends on inputs from both Agent 1 (style guide) and Agent 2 (news). But I quickly realized that Agent 1 and Agent 2 work with very different types of data, and that difference created the first real design-cost tradeoff.

The Problem: Misaligned Cadence = Wasted Cost

Agent 2’s inputs change constantly, so a daily trigger made sense. However, Agent 1’s source file, the MTF, only updates when I publish a new Substack post, which is usually two or three times a month.

Because I chose to run Agent 1 on GPT-5 latest, every run meant making API calls to a premium model. Unless I was publishing new posts every day, running Agent 1 daily like Agent 2 meant repeatedly paying GPT-5 rates to re-analyze the same archive. In other words, I’d be incurring costs without adding any value most of the time.

The Solution: Align Cadence With Data Freshness

The fix was to pull Agent 1 out of the daily loop and give it its own scenario. Instead of running every day like the rest of the workflow, it now runs twice a month — once in the middle and once at the end. Each run processes the full archive of my work (including any new Substack posts since the last run), generates an updated style guide for Agent 3, and saves it automatically to Dropbox. Then, Agent 3 simply refers to the latest style guide in Dropbox whenever it runs. This design change kept the workflow comprehensive but eliminated the wasted expense of daily Agent 1 runs.

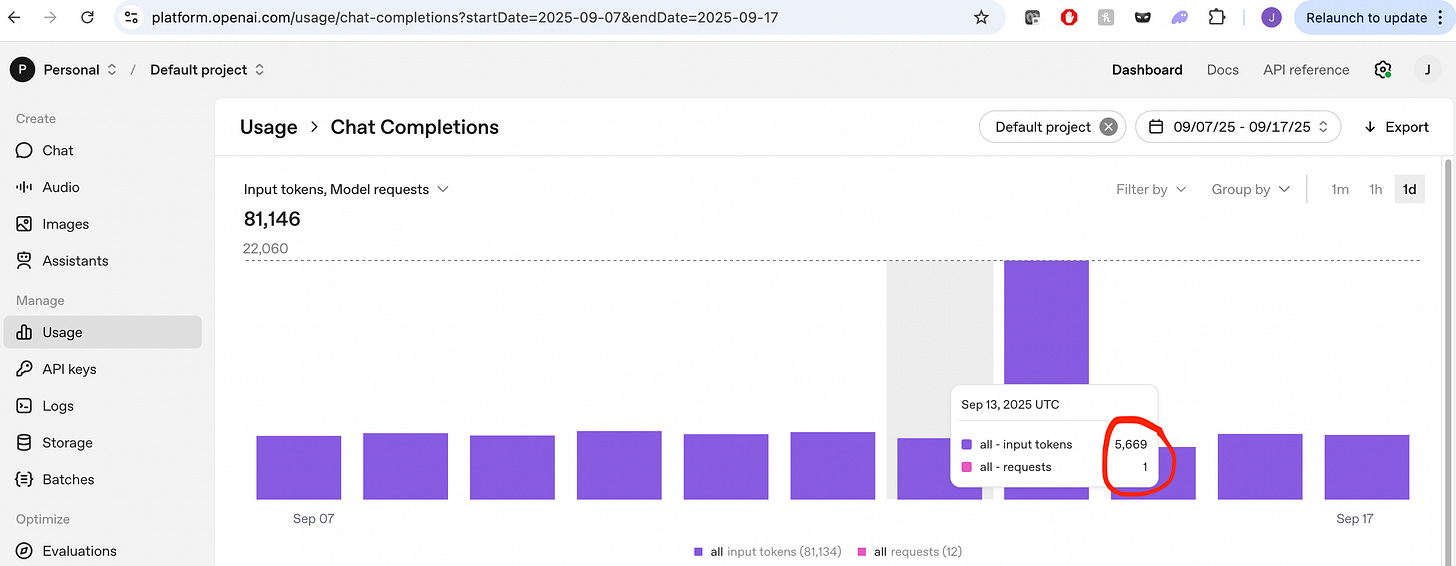

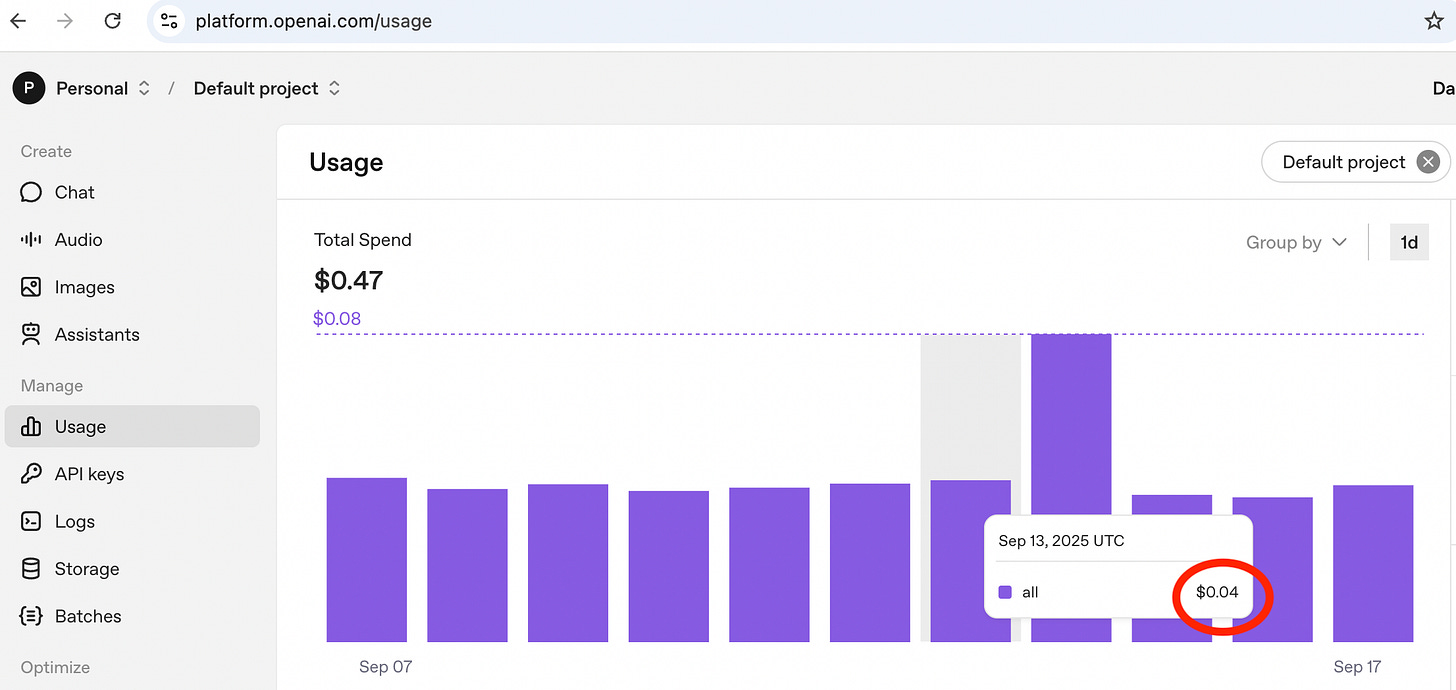
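The scheduling rule itself is simple enough to restate in a few lines of Python. This is only a sketch of the logic: in Make.com the cadence is a schedule setting on each scenario, not code. The function names and agent labels are hypothetical, and treating "the middle" of the month as the 15th is my assumption.

```python
import calendar
from datetime import date

MID_MONTH_DAY = 15  # assumption: stand-in for "the middle" of the month


def is_style_refresh_day(today: date) -> bool:
    """Return True on the mid-month day and on the last day of the month."""
    last_day = calendar.monthrange(today.year, today.month)[1]
    return today.day in (MID_MONTH_DAY, last_day)


def agents_to_run(today: date) -> list[str]:
    """List which agents fire on a given date (labels are illustrative)."""
    # The daily loop always runs; it reads whatever style guide was saved last.
    agents = ["agent_2_news_sourcer", "agent_3"]
    # The Style Analyzer only joins on the two refresh days.
    if is_style_refresh_day(today):
        agents.insert(0, "agent_1_style_analyzer")
    return agents
```

The point of the split is that Agent 3 never waits on Agent 1. It just picks up the most recent saved style guide, however old that is.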

The cost impact showed up clearly in my usage dashboard. On a baseline day (September 13, shown below as an example) with the daily workflow running without Agent 1, the system processes about 6,000 tokens at a cost of $0.04, driven by Agent 3’s GPT-5 calls.

Tokens:

Cost:

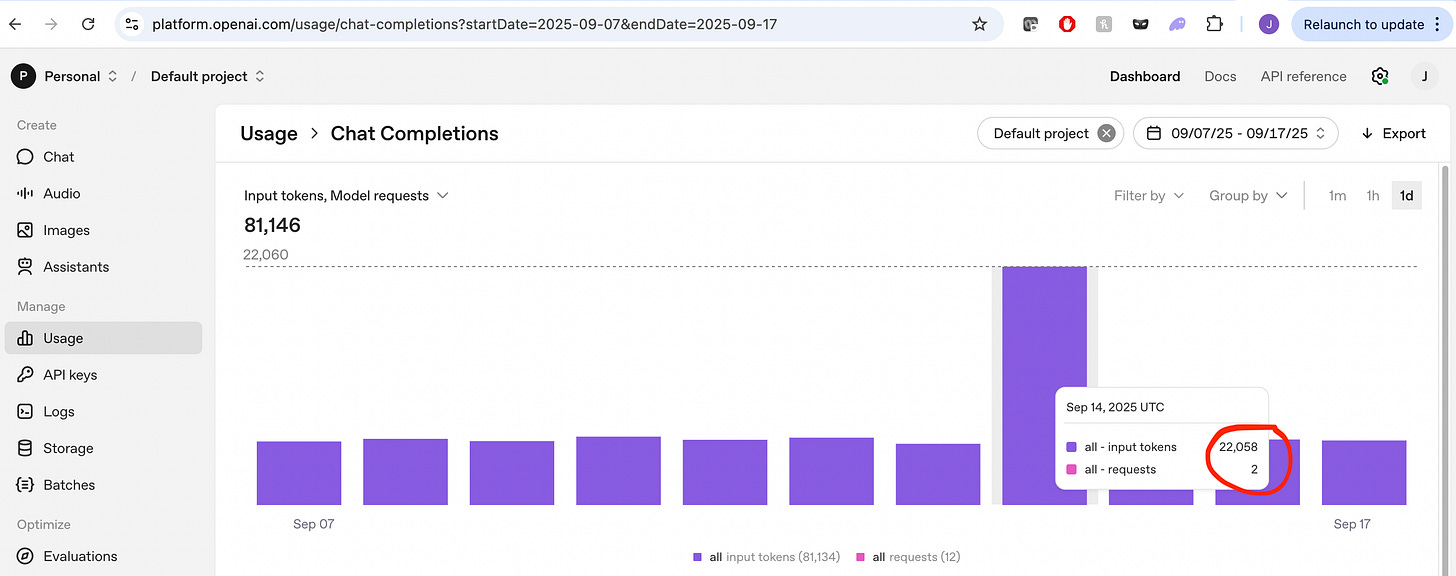

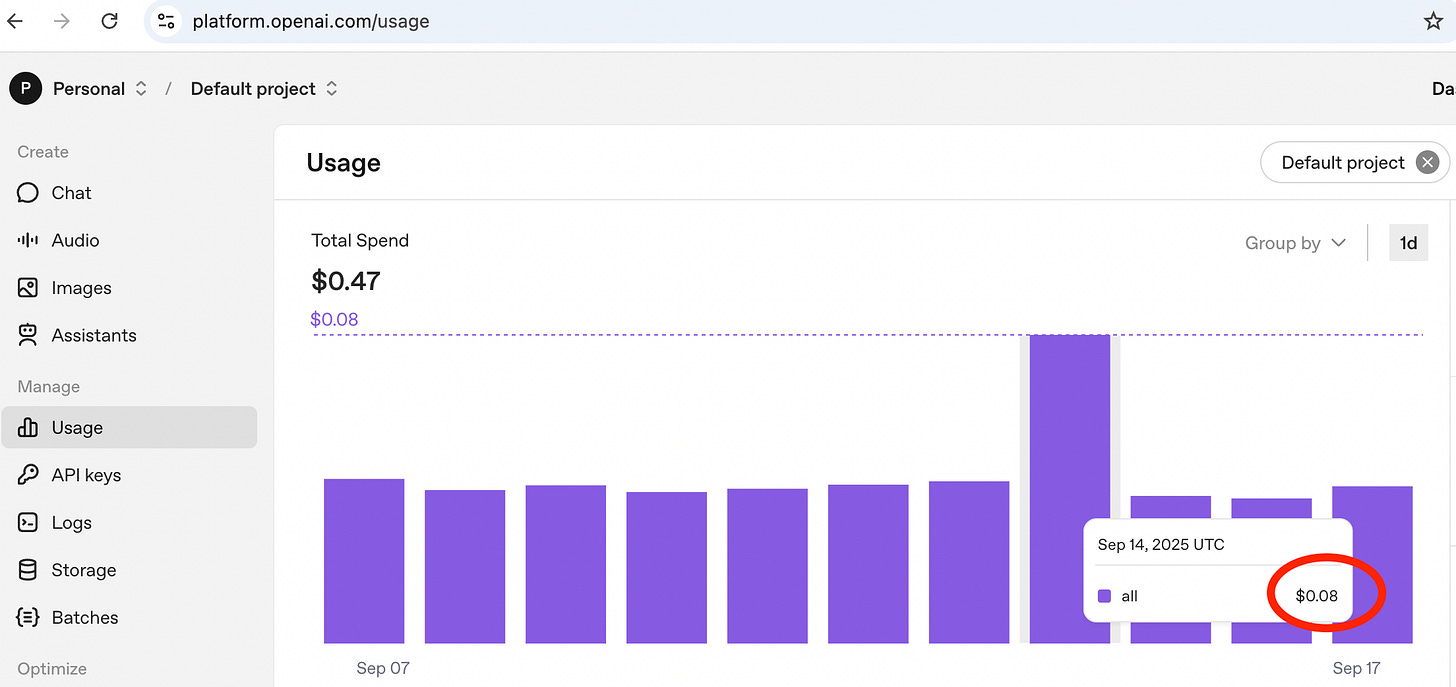

On a day when Agent 1 also runs (September 14), usage spikes to ~22,000 tokens and the cost doubles to $0.08.

Tokens:

Cost:

Running Agent 1 nearly quadruples token usage for that day and adds about four cents to the workflow’s total cost.

When you extend that out over a month, the picture becomes clearer:

For Agent 1 alone:

Daily cadence: $0.04 × 30 = $1.20

Twice-monthly cadence: $0.04 × 2 = $0.08

→ ~15x savings on Agent 1’s cost by moving to a twice-monthly cadence

For the total workflow:

Daily cadence: $0.08 × 30 = $2.40

Twice-monthly cadence: ($0.04 × 28) + ($0.08 × 2) = $1.28

→ ~47% savings overall by moving to a twice-monthly cadence
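For anyone who wants to plug in their own numbers, the arithmetic above can be reproduced in a short script. It is a sketch that assumes a 30-day month and the two per-day figures from my dashboard ($0.04 baseline, $0.04 extra on a day Agent 1 runs). The variable and function names are mine, and I work in whole cents to avoid floating-point rounding noise.

```python
BASELINE_CENTS = 4  # daily workflow without Agent 1 (September 13)
AGENT1_CENTS = 4    # extra cost on a day Agent 1 also runs (8 - 4 cents)
DAYS_IN_MONTH = 30  # assumption: a 30-day month


def monthly_cents(agent1_runs: int, days: int = DAYS_IN_MONTH) -> int:
    """Total monthly cost in cents for a given number of Agent 1 runs."""
    return BASELINE_CENTS * days + AGENT1_CENTS * agent1_runs


daily_total = monthly_cents(agent1_runs=DAYS_IN_MONTH)  # Agent 1 every day
twice_monthly_total = monthly_cents(agent1_runs=2)      # Agent 1 twice a month

# Agent 1 in isolation: 30 runs versus 2 runs.
agent1_ratio = (AGENT1_CENTS * DAYS_IN_MONTH) / (AGENT1_CENTS * 2)

# Whole-workflow savings as a percentage of the daily-cadence bill.
savings_pct = 100 * (daily_total - twice_monthly_total) / daily_total

print(f"Daily cadence:         ${daily_total / 100:.2f}")
print(f"Twice-monthly cadence: ${twice_monthly_total / 100:.2f}")
print(f"Agent 1 cost ratio:    {agent1_ratio:.0f}x")
print(f"Overall savings:       {savings_pct:.1f}%")
```

Changing `AGENT1_CENTS` is a quick way to see how the gap widens as the MTF grows and each Agent 1 run gets more expensive.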

In dollar terms, the difference may feel small today. But these runs are based on the current size of the MTF, which will only grow as I keep publishing long-form posts. The larger the MTF gets, the more tokens Agent 1 will need to process — and the more expensive those runs will become.

This is why the small architectural shift mattered. It highlighted a broader principle: to optimize cost, execution frequency should match how often the underlying data actually changes.
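A fixed schedule is one way to approximate this principle. A stricter alternative, which I did not build and include here only as an illustration, is to check whether the source file actually changed before paying for a run. The sketch below assumes the MTF is available as a string and that the fingerprint from the previous run was stored somewhere. Both function names are hypothetical.

```python
import hashlib
from typing import Optional


def fingerprint(text: str) -> str:
    """Return a SHA-256 hex digest that changes whenever the text changes."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def needs_refresh(current_text: str, last_fingerprint: Optional[str]) -> bool:
    """Decide whether the expensive analysis should run again."""
    # No stored fingerprint means the analysis has never run.
    if last_fingerprint is None:
        return True
    # Otherwise run only when the archive differs from the last analyzed version.
    return fingerprint(current_text) != last_fingerprint
```

With a check like this, the premium-model call fires only when a new post has actually been added to the archive, regardless of how often the check itself runs.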

The Contrast: Zero Cost = Architectural Freedom

While Agent 1 showed me how cadence and cost are tightly linked, Agent 2 highlighted the flip side: when resources are free, you can afford to optimize for quality rather than efficiency.

In my workflow, Agent 2 uses HTTP modules in Make.com to call the NewsAPI, and at my workflow’s scale, those calls are free. That meant I could design around completeness rather than cost.

At first, I had Agent 2 use a single HTTP module to fetch a mixed set of 10 articles, which often skewed the results: some days it returned all AI headlines, other days all finance.

Because the API calls carried no cost, the better solution was to split the request into two HTTP modules (two separate NewsAPI calls), both operated by Agent 2: one for AI news, one for finance. Running them in parallel guaranteed balanced coverage every day, giving Agent 3 a stronger input set to work from.
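To make the split concrete, here is a rough Python equivalent of the two HTTP modules. It only builds the request URLs, so it runs without a network call or a real key. The endpoint and parameter names come from NewsAPI's `/v2/everything` API. The search terms, the five-articles-per-topic split, and the function names are my assumptions for illustration, not the exact settings in my scenario.

```python
from urllib.parse import urlencode

NEWSAPI_ENDPOINT = "https://newsapi.org/v2/everything"

# Assumed search terms; the real queries may differ.
TOPIC_QUERIES = {
    "ai": "artificial intelligence",
    "finance": "finance",
}


def build_news_request(query: str, page_size: int, api_key: str) -> str:
    """Build one NewsAPI request URL for a single topic."""
    params = {
        "q": query,
        "pageSize": page_size,    # cap the number of articles per topic
        "sortBy": "publishedAt",  # newest first
        "language": "en",
        "apiKey": api_key,
    }
    return f"{NEWSAPI_ENDPOINT}?{urlencode(params)}"


def balanced_requests(api_key: str, per_topic: int = 5) -> dict[str, str]:
    """Return one request URL per topic so neither topic can crowd out the other."""
    return {
        topic: build_news_request(query, per_topic, api_key)
        for topic, query in TOPIC_QUERIES.items()
    }


urls = balanced_requests(api_key="YOUR_API_KEY")  # placeholder key
```

Because each topic gets its own request with its own `pageSize`, the balance is fixed by construction instead of depending on which headlines happen to rank highest that day.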

And so the design philosophy Agent 2 taught me was simple: when cost isn’t a constraint, use that freedom to optimize for quality.

Beyond My Workflow: The Cost Lesson

While in my workflow the savings added up to only about a dollar a month, at enterprise scale the same cadence principle determines whether costs stay contained or spiral out of control.

Agent 1 showed me the discipline side of the equation: when resources are expensive, cadence becomes the lever to control spend. Agent 2 highlighted the opposite: when resources are free, cadence gives you the freedom to optimize for quality and breadth. Together, they frame the spectrum of choices every AI agent workflow designer faces.

The same dynamic applies when evaluating AI solution vendors or building internal tools. A vendor might cut costs by using a cheaper model, limiting reasoning effort, caching results, or reducing refresh frequency. An internal team might over-optimize for efficiency and risk stale outputs, or ignore costs and build infrastructure that won’t scale.

The point isn’t that these trade-offs always happen, but that they could. And they’re easy to miss if you only ask whether the system “works.”

That’s the real lesson. Cost isn’t just a budget line; it’s a design constraint that shapes orchestration. Sometimes it forces restraint; sometimes it grants freedom. The key is knowing which situation you’re in and architecting accordingly.